AI Ethics: Who Is Responsible for Machine Actions?

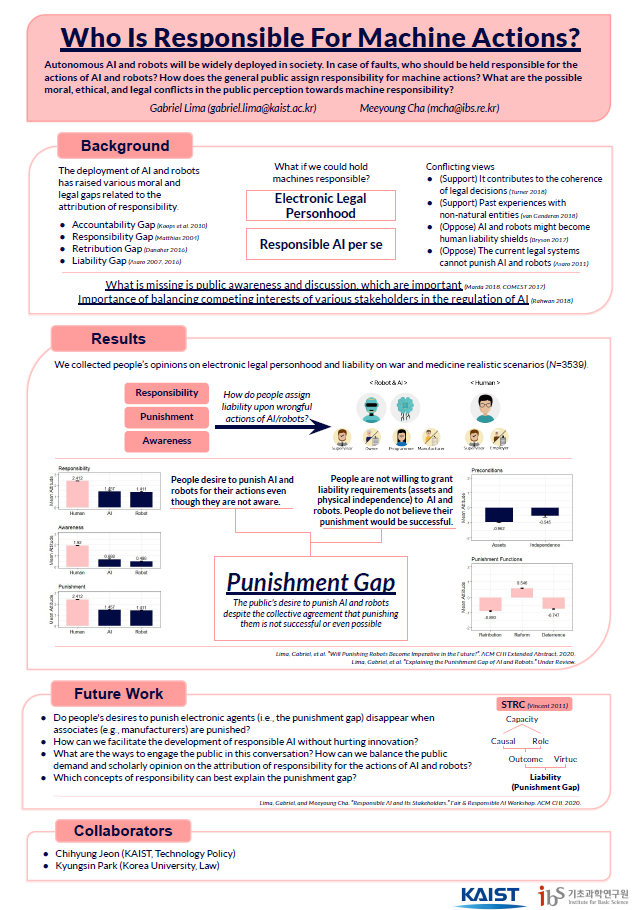

As artificial intelligence (AI) and robots are deployed more widely, their actions will have far-reaching consequences for society. We study how people perceive the duties and rights of AI and robots in situations where these electronic agents’ actions have negative consequences.

Imagine a human doctor whose wrong diagnosis led to the death of a patient. The human doctor could be held responsible both legally and morally. What if the doctor in question were an AI? Can an AI be held responsible for its actions? How could an AI doctor be punished?

“Who is responsible for machine actions?”

The current legal system considers two main entities when assigning responsibility: the manufacturer and the user. Take, for example, the Uber autonomous vehicle accident that led to the death of a pedestrian. Uber, the company responsible for the AI behind the wheel, settled and took responsibility for it [1]. This arrangement, however, raises the question of whether such decisions are legally and morally coherent, effective, and scalable [2].

Scholars and the public sector have debated various solutions to these responsibility gaps. A prominent, yet controversial, possibility would be to change our social and legal landscape and treat AI and robots as entities that could be held responsible for their own actions. How would society and legal systems hold automated systems responsible for their actions? Can electronic agents themselves pay for damages directly? Is it possible to punish electronic agents? All these questions are related to the concept of electronic personhood.

“How can society and legal systems hold electronic agents responsible for their own actions?”

Resolving these questions of responsibility for automated agents has tremendous implications for society. Public opinion on the issue, however, has yet to be collected. Regulators and scholars must take into account what the public thinks about these questions, or at least be prepared for the public backlash that may arise [3]. This is where our research comes in. Our goal is to make the public aware of this discussion and to collect public perceptions of how responsibility for machine actions should be assigned across all entities involved, including the machine itself.

For a glance at our current work, have a look at our papers:

- “Will Punishing Robots Become Imperative in the Future?” Lima, Gabriel, et al. ACM CHI Extended Abstract. 2020.

- “Responsible AI and Its Stakeholders.” Lima, Gabriel, and Meeyoung Cha. Fair & Responsible AI Workshop. ACM CHI. 2020.

- “Explaining the Punishment Gap of AI and Robots.” Lima, Gabriel, et al. Preprint. ArXiv.

This research project is led by Gabriel Lima (gabriel.lima@kaist.ac.kr), a researcher at IBS, who works closely with Meeyoung Cha, Chief Investigator of the IBS Data Science Group. Collaborators on this project include Chihyung Jeon, assistant professor at KAIST, and Kyungsin Park, professor at Korea University.

1. https://www.npr.org/sections/thetwo-way/2018/03/29/597850303/uber-reaches-settlement-with-family-of-arizona-woman-killed-by-driverless-car

2. Turner, Jacob. Robot Rules: Regulating Artificial Intelligence. Springer, 2018.

   Matthias, Andreas. “The responsibility gap: Ascribing responsibility for the actions of learning automata.” Ethics and Information Technology 6.3 (2004): 175-183.

   Danaher, John. “Robots, law and the retribution gap.” Ethics and Information Technology 18.4 (2016): 299-309.

   Chopra, Samir, and Laurence F. White. A Legal Theory for Autonomous Artificial Agents. University of Michigan Press, 2011.

3. https://cacm.acm.org/magazines/2020/3/243030-crowdsourcing-moral-machines/fulltext